Many tools and applications publish their Docker images to Docker Hub and other public repositories, and many of them supply a docker-compose file to help with a boilerplate deployment. Those files are helpful when you are playing around with an application or tool to figure out how it can best be used to solve the problems you are trying to solve.

Running a containerized app on your workstation is as easy as running a docker run command. But more often than not, you will want to see the tool or application running on a cloud platform like AWS ECS. I ran into this situation and was going to ignore the docker-compose file and spin up an ECS cluster myself. Then I learned that docker-compose has native ECS integration, and further googling led me to these two AWS blogs:

- Deploy applications on Amazon ECS using Docker Compose

- Automated software delivery using Docker Compose and Amazon ECS

So I decided to give it a go. Below is what I learned from the experiment.

The Beginning

The ECS integration documentation suggests that you can spin up an ECS cluster running an nginx service with an EFS volume attached by using a docker-compose file like the one below:

```yaml
services:
  nginx:
    image: nginx
    volumes:
      - mydata:/some/container/path
volumes:
  mydata:
```

I thought that would be too simple an example to experiment with, so I chose an ELK stack docker-compose file from the Awesome docker-compose GitHub repo. I made a few changes to the downloaded file (as in this commit), installed the docker-compose CLI and tried to deploy it:

```shell
docker context create ecs demoecs --from-env  # AWS credentials are set in env variables
docker context use demoecs
docker --debug compose -f elastic-docker-compose.yaml up
```

I hit an error straight away:

```
ECS Fargate does not support bind mounts from host: incompatible attribute
```

That is an easy fix: I just needed to change the volume definitions to use EFS (named volumes) instead of bind mounts.

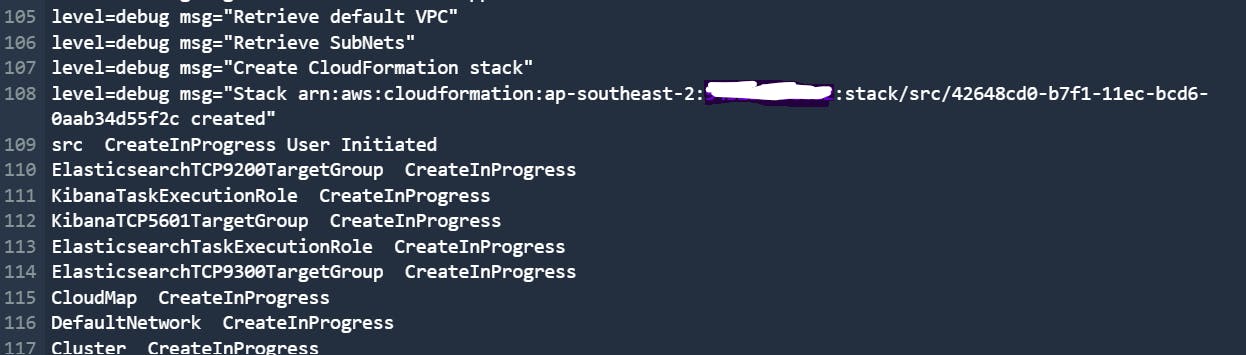
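You can check for this problem before deploying. In the compose short syntax, a volume whose source looks like a path (starting with `.`, `/` or `~`) is a host bind mount. A plain name refers to a named volume, which the ECS integration backs with EFS. The Python sketch below assumes the compose file has already been parsed into a dict (for example with a YAML library, not shown). The function name and the Elasticsearch paths in the usage example are hypothetical and not taken from the actual ELK compose file.

```python
from __future__ import annotations


def find_bind_mounts(compose: dict) -> list[tuple[str, str]]:
    """Return (service, volume) pairs that would be host bind mounts.

    `compose` is a compose file already parsed into a dict (parsing not shown).
    """
    findings = []
    for name, service in compose.get("services", {}).items():
        for volume in service.get("volumes", []):
            if isinstance(volume, dict):
                # Long syntax: the mount type is spelled out explicitly.
                if volume.get("type") == "bind":
                    findings.append((name, f"{volume.get('source')}:{volume.get('target')}"))
            elif ":" in volume:
                # Short syntax "SOURCE:TARGET[:MODE]": a path-like source is a
                # bind mount, a plain name is a named volume.
                source = volume.split(":", 1)[0]
                if source.startswith((".", "/", "~")):
                    findings.append((name, volume))
    return findings


# Hypothetical ELK-style service: one bind-mounted config file, one named volume.
compose = {
    "services": {
        "elasticsearch": {
            "volumes": [
                "./elasticsearch/config/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml:ro",
                "esdata:/usr/share/elasticsearch/data",
            ]
        }
    },
    "volumes": {"esdata": {}},
}

for service, volume in find_bind_mounts(compose):
    print(f"{service}: bind mount not supported on Fargate: {volume}")
```

Anonymous volumes (a bare container path with no colon) are not flagged, because they are not bind mounts either.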

I fixed it in this commit and ran docker compose up again. This time it looked more promising: the logs showed docker compose deploying a CloudFormation stack.

So under the hood, docker-compose builds a CloudFormation template from the compose file and deploys it.


According to the log, the stack was being deployed to the default VPC, which is not what I wanted. I let the deployment continue anyway. The stack appeared to be going okay, but the ECS tasks were not coming up. In each of the three services in the ECS cluster, the tasks moved from 'PENDING' to 'STOPPED' with errors like:

```
KibanaService TaskFailedToStart: CannotPullContainerError: inspect image has been retried 5 time(s): failed to resolve ref "docker.io/docker/ecs-searchdomain-sidecar:1.0": failed to do request: Head registry-1.docker.io/v2/docker/ecs-searchdo..
```

The docker-compose file doesn't reference any images other than Elasticsearch, Logstash and Kibana, so where did the ecs-searchdomain-sidecar image come from? It must be docker-compose magic that adds sidecars to the task definitions. The fact that it was deploying the stack to the default VPC probably explains the connectivity issue (unable to pull the container image): not all subnets in my default VPC have outbound Internet access. I didn't want to deploy the ECS cluster in my default VPC anyway. So I should be fine if I explicitly tell docker compose which VPC to use instead of letting it pick the default. The trick is to add x-aws-vpc at the top level of the docker-compose file:

```yaml
x-aws-vpc: vpc-89768976kjdafad798
```

I also noticed that the ECS cluster had been created with the name 'src', which is not a helpful name. This was presumably the name of the directory holding the compose file, which Compose uses as the default project name. A bit more digging in the documentation suggested providing an explicit project name with the docker compose command. With the VPC setting added, my compose file now begins as shown below:

```yaml
version: 3
x-aws-vpc: vpc-89768976kjdafad798
services:
  elasticsearch:
    ...
```

The docker compose command was changed to provide an explicit project name:

```shell
docker --debug compose -p elk-ecs -f elastic-docker-compose.yaml up
```

The Next attempt

With the changes in place, I did another deploy (docker compose up). Things were looking good until I noticed this error in the logs:

```
LoadBalancer CreateFailed A load balancer cannot be attached to multiple subnets in the same Availability Zone (Service: AmazonElasticLoadBalancing; Status Code: 400; Error Code: InvalidConfigurationRequest; Request ID: f263ebe1-4d7d-43dc-be77-3c6b9196f059; Proxy: null)
```

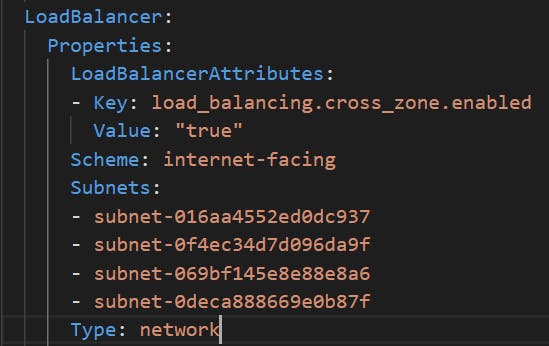

Well, that is confusing. My VPC is a simple one, with two public subnets and two private subnets across two Availability Zones. The load balancer is supposed to be in the public subnets, and there aren't any overlapping subnets that could trigger this error. The docker compose log said nothing beyond this, so I decided to look at the CloudFormation template generated by docker compose. You can ask docker compose to generate the CloudFormation template by running:

```shell
docker compose convert > efn-cloudformation.yaml
```

(where efn-cloudformation.yaml is the name of the output file). Then I looked at the load balancer resource in the generated template.

By default, it creates a Network Load Balancer and places it across all the subnets in the VPC, private subnets included! With two subnets in each Availability Zone, that is exactly what triggers the error above. Surely I must have done something wrong.
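The rule behind the error is easy to check ahead of time: group the load balancer's subnets by Availability Zone and look for any zone that has more than one. The minimal Python sketch below assumes the subnet-to-AZ mapping is already known, for example from the output of `aws ec2 describe-subnets`. The subnet IDs, region and function name are hypothetical.

```python
from __future__ import annotations

from collections import defaultdict


def subnets_sharing_an_az(subnet_ids: list[str], subnet_az: dict[str, str]) -> dict[str, list[str]]:
    """Return the Availability Zones that would get more than one of the given
    subnets; an Elastic Load Balancer accepts only one subnet per AZ."""
    by_az = defaultdict(list)
    for subnet in subnet_ids:
        by_az[subnet_az[subnet]].append(subnet)
    return {az: subnets for az, subnets in by_az.items() if len(subnets) > 1}


# Hypothetical VPC: two public and two private subnets across two AZs.
subnet_az = {
    "subnet-public-a": "ap-southeast-2a",
    "subnet-private-a": "ap-southeast-2a",
    "subnet-public-b": "ap-southeast-2b",
    "subnet-private-b": "ap-southeast-2b",
}

# Every subnet in the VPC: both AZs end up with two subnets.
print(subnets_sharing_an_az(list(subnet_az), subnet_az))
# Public subnets only: one subnet per AZ, so no clash.
print(subnets_sharing_an_az(["subnet-public-a", "subnet-public-b"], subnet_az))
```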


Looking further into the CloudFormation template, it was clear that all the ECS services and EFS resources were placed in all the subnets. I would have expected the load balancer to be in the public subnets and the rest of the resources in the private subnets. Either something is wrong with my docker-compose file, or the way docker compose generates CloudFormation is not smart enough to separate services into public and private subnets.
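You can script a check for this kind of misplacement. The sketch below walks the `Resources` section of a template already loaded into a Python dict. For each logical ID, it reports the subnets the resource should not be in: load balancers outside the public subnets, and ECS services and EFS mount targets outside the private ones. The property paths are the standard CloudFormation ones (`Subnets`, `NetworkConfiguration.AwsvpcConfiguration.Subnets`, `SubnetId`). The logical IDs and subnet IDs in the example are hypothetical. The subnet lists you pass in must use the same form as the template: literal IDs, or `{"Ref": ...}` dicts if the template references parameters.

```python
from __future__ import annotations

LOAD_BALANCER = "AWS::ElasticLoadBalancingV2::LoadBalancer"


def subnets_of(resource: dict) -> list:
    """Return the subnets a resource is placed in, for the types checked here."""
    props = resource.get("Properties", {})
    rtype = resource.get("Type")
    if rtype == LOAD_BALANCER:
        return props.get("Subnets", [])
    if rtype == "AWS::ECS::Service":
        awsvpc = props.get("NetworkConfiguration", {}).get("AwsvpcConfiguration", {})
        return awsvpc.get("Subnets", [])
    if rtype == "AWS::EFS::MountTarget" and "SubnetId" in props:
        return [props["SubnetId"]]
    return []


def misplaced_resources(template: dict, public: list, private: list) -> dict:
    """Map logical IDs to the subnets each resource should not be in:
    load balancers belong in public subnets, everything else in private ones."""
    problems = {}
    for logical_id, resource in template.get("Resources", {}).items():
        allowed = public if resource.get("Type") == LOAD_BALANCER else private
        wrong = [s for s in subnets_of(resource) if s not in allowed]
        if wrong:
            problems[logical_id] = wrong
    return problems


# Hypothetical template excerpt with literal subnet IDs.
public = ["subnet-public-a", "subnet-public-b"]
private = ["subnet-private-a", "subnet-private-b"]
template = {
    "Resources": {
        "LoadBalancer": {
            "Type": LOAD_BALANCER,
            "Properties": {"Type": "network", "Subnets": public + private},
        },
        "KibanaService": {
            "Type": "AWS::ECS::Service",
            "Properties": {
                "NetworkConfiguration": {"AwsvpcConfiguration": {"Subnets": public + private}}
            },
        },
    }
}

for logical_id, subnets in misplaced_resources(template, public, private).items():
    print(logical_id, "should not be in", subnets)
```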

Digging Deep

Two questions now needed answers:

- Can we configure the load balancer to be placed only in the public subnets?

- Can we place the ECS services and any other VPC resources (EFS etc.) only in the private subnets?

Going back to the documentation, I found that x-aws-loadbalancer can be used to specify an existing load balancer. That means the load balancer is not managed by the same stack as the application, but it can still be a workable solution.

I was hoping to find an x-aws-subnets option, but couldn't find one anywhere in the documentation. So I turned to Google and stumbled across this GitHub issue. Long story short, there is no option to specify which subnets to use.

The Way forward

At this point, what is the way forward?

- Create a VPC with only private subnets and use it? That would work for my 'experiment', but not when I have to deploy something to production, where the VPCs are already well defined.

- Create and manage the load balancer outside of docker compose, and let the application and its VPC resources span all subnets, private and public. Again, that would work for experiments, but I doubt that setup would get the green light for production. The compose file would then begin like this:

```yaml
version: 3
x-aws-vpc: vpc-89768976kjdafad798
x-aws-loadbalancer: elk-app-loadbalancer-name
services:
  elasticsearch:
    ...
```

- Use docker compose to generate the CloudFormation template, then edit it to add or modify resource settings as needed. For example, change the load balancer to an Application Load Balancer instead of a Network Load Balancer, remove the private subnets from the load balancer configuration, and remove the public subnets from the ECS services' network configuration. Once you start using the modified CloudFormation template, docker compose has no role in future updates to that stack.
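For the third option, you can script the subnet part of that editing instead of doing it by hand. The minimal sketch below assumes the generated template has been converted to JSON (for instance with AWS's `cfn-flip` tool) and loaded with `json.load`. It also assumes the load balancer lists its subnets under `Subnets` rather than `SubnetMappings`. The function returns a copy of the template with every load balancer in the public subnets and every ECS service in the private subnets, with public IPs switched off for the tasks. It deliberately leaves the load balancer `Type` alone, because switching to an Application Load Balancer also means reworking the listeners and target groups (an ALB supports only HTTP/HTTPS listeners). It also does not touch EFS mount targets, which would need similar treatment. The function name and IDs are hypothetical.

```python
from __future__ import annotations

import copy


def place_subnets(template: dict, public: list, private: list) -> dict:
    """Return a copy of the template with load balancers in the public subnets
    and ECS services in the private subnets."""
    fixed = copy.deepcopy(template)
    for resource in fixed.get("Resources", {}).values():
        rtype = resource.get("Type")
        if rtype == "AWS::ElasticLoadBalancingV2::LoadBalancer":
            resource.setdefault("Properties", {})["Subnets"] = list(public)
        elif rtype == "AWS::ECS::Service":
            awsvpc = (
                resource.setdefault("Properties", {})
                .setdefault("NetworkConfiguration", {})
                .setdefault("AwsvpcConfiguration", {})
            )
            awsvpc["Subnets"] = list(private)
            # A public IP is of no use in a private subnet; outbound traffic
            # (image pulls included) needs a NAT gateway or VPC endpoints there.
            awsvpc["AssignPublicIp"] = "DISABLED"
    return fixed


# Hypothetical subnet IDs and template excerpt.
public = ["subnet-public-a", "subnet-public-b"]
private = ["subnet-private-a", "subnet-private-b"]
template = {
    "Resources": {
        "LoadBalancer": {
            "Type": "AWS::ElasticLoadBalancingV2::LoadBalancer",
            "Properties": {"Type": "network", "Subnets": public + private},
        },
        "KibanaService": {
            "Type": "AWS::ECS::Service",
            "Properties": {
                "NetworkConfiguration": {"AwsvpcConfiguration": {"Subnets": public + private}}
            },
        },
    }
}

fixed = place_subnets(template, public, private)
print(fixed["Resources"]["LoadBalancer"]["Properties"]["Subnets"])
print(fixed["Resources"]["KibanaService"]["Properties"]["NetworkConfiguration"])
```

Working on a deep copy keeps the original generated template intact, so the two can be compared before the edited one is deployed.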

So Will I use it again?

The docker-compose ECS integration is a useful tool to have in your toolkit. How useful it is will depend on various factors.

- If you are playing around with some containerized apps and want to quickly deploy them to ECS for trials, the docker-compose ECS integration provides an easy way to deploy (provided you are okay with the subnet/ELB constraints discussed above).

- You can use it as a quick and dirty way to get a CloudFormation template for your solution's deployment: have the docker-compose ECS integration generate the CloudFormation instead of using it as a deployment tool. The generated template will obviously need some massaging to work with a standard VPC setup and to be more human-readable. But that is probably still faster than writing a CloudFormation template from scratch. Once you switch to CloudFormation, docker compose is of no further use in that application's life cycle. You might also want to consider whether you are happy using CloudFormation at all. Native AWS tooling enthusiasts have been moving from CloudFormation to CDK, and many others prefer tools like Terraform for their infrastructure code.

- Using it as a production deployment tool is highly questionable, for the reasons discussed earlier. It also lacks a "show the difference" feature that summarizes the changes about to be deployed. But for small internal applications (running within a VPC with only private subnets), it could provide a faster path to deployment. There is another point to note here, though: some private networks are set up with limited outbound connectivity and cannot pull images from a public image repository (like the ecs-searchdomain-sidecar image). In that case, the only option is probably to generate the CloudFormation template and change the sidecar image URL (and the URLs of the other images you use) to point at an ECR repository that the VPC has access to.
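The image swap can be sketched the same way. The sketch assumes each public image has been mirrored into ECR under the same repository path and tag. That convention is hypothetical, and the registry URI, account ID, region and Kibana tag below are placeholders. The function rewrites the `Image` of every container in the template's `AWS::ECS::TaskDefinition` resources. It first drops an explicit registry host such as `docker.io` or `docker.elastic.co`. Images given as CloudFormation intrinsic functions rather than plain strings are left untouched.

```python
from __future__ import annotations

import copy


def repository_path(image: str) -> str:
    """Drop an explicit registry host, so 'docker.io/docker/foo:1.0' and
    'docker/foo:1.0' map to the same repository path."""
    first, _, rest = image.partition("/")
    # Roughly Docker's own rule: a first component containing a dot or a port,
    # or equal to 'localhost', is a registry host rather than a namespace.
    if rest and ("." in first or ":" in first or first == "localhost"):
        return rest
    return image


def mirror_images_to_ecr(template: dict, registry: str) -> dict:
    """Return a copy of the template whose task definitions pull every image
    from `registry`, keeping the repository path and tag unchanged."""
    fixed = copy.deepcopy(template)
    for resource in fixed.get("Resources", {}).values():
        if resource.get("Type") != "AWS::ECS::TaskDefinition":
            continue
        for container in resource.get("Properties", {}).get("ContainerDefinitions", []):
            image = container.get("Image")
            # Only rewrite plain strings that don't already point at the registry.
            if isinstance(image, str) and not image.startswith(registry + "/"):
                container["Image"] = f"{registry}/{repository_path(image)}"
    return fixed


registry = "123456789012.dkr.ecr.ap-southeast-2.amazonaws.com"  # hypothetical
template = {
    "Resources": {
        "KibanaTaskDefinition": {
            "Type": "AWS::ECS::TaskDefinition",
            "Properties": {
                "ContainerDefinitions": [
                    {"Name": "sidecar", "Image": "docker/ecs-searchdomain-sidecar:1.0"},
                    {"Name": "kibana", "Image": "docker.elastic.co/kibana/kibana:7.10.1"},
                ]
            },
        }
    }
}

fixed = mirror_images_to_ecr(template, registry)
for container in fixed["Resources"]["KibanaTaskDefinition"]["Properties"]["ContainerDefinitions"]:
    print(container["Name"], container["Image"])
```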